Machine Learning Algorithms

1. Supervised learning

Learns from being given "right answers"

Used most in real-world applications; has seen the most rapid advancements and innovation

Learns the mapping from x (input) to y (output)

Give the learning algorithm examples to learn from

- A particular type of supervised learning algorithm: Regression

Regression: predict a number from infinitely many possible outputs

- Training set : Data used to train the model

- X = 'input' variable, feature

- Y = 'output' variable, 'target' variable

- m = number of training examples

- (X,Y) = single training example

- (X^(i), Y^(i)) = i-th training example ** the (i) is only an index, not a power or a root, so don't confuse them

- 'y-hat' = estimate or prediction for y ** plain Y is the target: the actual true value in the training set

- Model = function f (X -> f -> y-hat)

- f_wb(x) = f(x) = wx + b (w, b: parameters, also called coefficients or weights) (w = slope, b = intercept)

- y-hat is a point on the linear regression line. The 'X' marks denote y^(i)
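The linear model from the list above can be sketched in a few lines. This is only an illustration: the function name `predict` and the values of `x_train`, `w` and `b` are assumptions made up for the example, not part of the notes.

```python
import numpy as np

def predict(x, w, b):
    """Return y-hat = w * x + b for a scalar or a NumPy array x."""
    return w * x + b

# Hypothetical training inputs and parameters, chosen only for illustration.
x_train = np.array([1.0, 2.0, 3.0])
w, b = 2.0, 1.0

# One prediction per training example, each a point on the line y = w*x + b.
y_hat = predict(x_train, w, b)
print(y_hat)
```

Because NumPy applies `w * x + b` element by element, the same function works for a single input or for the whole training set.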

How to represent model f ?

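** Python (NumPy): .shape returns a tuple with the size of the array along each axis. For a 2-D array this is (number of rows, number of elements in each row),

so .shape[0] = number of rows and .shape[1] = number of elements in each row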
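A small check of what `.shape` returns, assuming NumPy arrays. The arrays `a` and `x_train` are hypothetical values used only to show the behavior.

```python
import numpy as np

# Hypothetical 2-D array: 3 rows, each holding 2 elements.
a = np.array([[1, 2], [3, 4], [5, 6]])
print(a.shape)     # (3, 2)
print(a.shape[0])  # 3, the number of rows
print(a.shape[1])  # 2, the number of elements in each row

# For a 1-D array of training inputs, shape has a single entry,
# so shape[0] is the number of training examples m.
x_train = np.array([1.0, 2.0, 3.0, 4.0])
m = x_train.shape[0]
print(m)  # 4
```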

Cost function J(w,b) (also referred to as a loss function), here the squared error cost function **J is a function of w (and b); f is a function of x!!**

- commonly used for linear regression

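y-hat^(i) = f_wb(x^(i)) = w*x^(i) + b, that is, J(w,b) = (1/(2m)) * sum over i = 1..m of (y-hat^(i) - y^(i))^2

The goal is to minimize J(w,b) (the lower the value, the better the model)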
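A minimal sketch of the squared error cost, assuming the common convention of dividing the summed squared errors by 2m (the notes do not spell out the scaling). The name `compute_cost` and the data are made up for the example.

```python
import numpy as np

def compute_cost(x, y, w, b):
    """Squared error cost J(w,b), with the assumed 1/(2m) scaling."""
    m = x.shape[0]                  # number of training examples
    y_hat = w * x + b               # predictions for every example
    return np.sum((y_hat - y) ** 2) / (2 * m)

# Hypothetical training set generated from y = 2x + 1.
x_train = np.array([1.0, 2.0, 3.0])
y_train = np.array([3.0, 5.0, 7.0])

cost_perfect = compute_cost(x_train, y_train, w=2.0, b=1.0)  # line fits exactly
cost_off = compute_cost(x_train, y_train, w=1.0, b=1.0)      # slope is wrong
print(cost_perfect, cost_off)
```

The parameters that match the data give a cost of zero, while the wrong slope gives a larger cost, which is what "lower is better" means here.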

Gradient Descent

An algorithm that can be used to try to minimize any function, not just the cost function for linear regression

Used to minimize a cost function when there are several parameters

- Start with some w, b

- Keep changing w, b to reduce J(w,b)

- Until we settle at or near a minimum (there can be more than one minimum)

How to implement the gradient descent algorithm

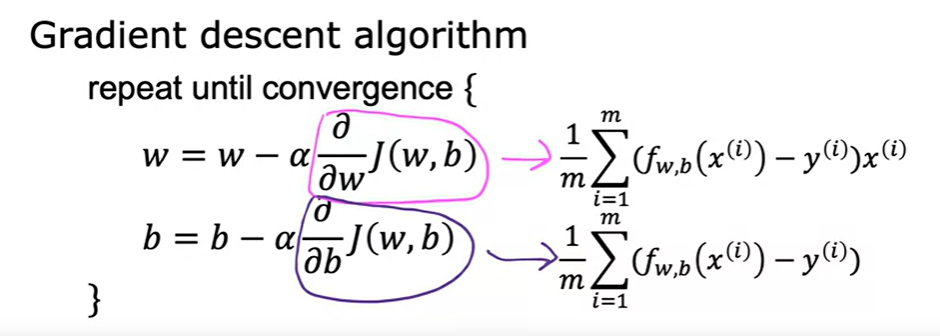

w (updated) = w - a * d/dw J(w,b), b (updated) = b - a * d/db J(w,b)

- Repeat until convergence

- a (alpha): learning rate, typically a small positive number (0 < a < 1). If alpha is large, gradient descent is very aggressive; if alpha is small, it takes baby steps

If the learning rate is too small, each update changes the parameters very little, so gradient descent may be slow

If the learning rate is too large, each update changes the parameters a lot, so gradient descent may overshoot and never reach the minimum: it can fail to converge, or even diverge

- If w is already at a minimum, d/dw J(w,b) = 0 (slope = 0), so the update leaves w unchanged (new w = w)

As w gets nearer a minimum, the derivative becomes smaller, so the update steps become smaller; the minimum can be reached without decreasing the learning rate a

- d/dw J(w,b): gives the direction of the step and, scaled by a, its size

- Simultaneously update w and b
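The effect of the learning rate can be seen on a toy problem. The sketch below assumes a made-up one-parameter cost J(w) = 2*w**2 (derivative 4*w), which is not from the notes; the function name and the three alpha values are likewise chosen only for illustration.

```python
def run_gradient_descent(alpha, w_start=1.0, steps=10):
    """Minimize the toy cost J(w) = 2 * w**2, whose derivative is 4 * w."""
    w = w_start
    history = [w]
    for _ in range(steps):
        grad = 4 * w          # dJ/dw at the current w
        w = w - alpha * grad  # gradient descent update
        history.append(w)
    return history

slow = run_gradient_descent(alpha=0.01)      # tiny steps, still far from 0
good = run_gradient_descent(alpha=0.2)       # steps shrink as w nears 0
diverging = run_gradient_descent(alpha=0.6)  # overshoots, |w| keeps growing

print(abs(slow[-1]), abs(good[-1]), abs(diverging[-1]))
```

With the fixed alpha of 0.2 the steps get smaller on their own, because the derivative shrinks as w approaches the minimum at 0.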

To be more precise: this is 'batch' gradient descent (batch = the whole group of training examples)

- Every step of gradient descent uses all the training examples.
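Putting the pieces together, here is a rough sketch of batch gradient descent for the linear model f(x) = wx + b. It assumes the squared error cost with 1/(2m) scaling, and the function names, data, learning rate and iteration count are all hypothetical choices for the example.

```python
import numpy as np

def compute_gradient(x, y, w, b):
    """Gradients of the squared error cost, summed over ALL examples (batch)."""
    m = x.shape[0]
    error = (w * x + b) - y        # y-hat minus y for every example
    dj_dw = np.sum(error * x) / m  # d/dw J(w,b)
    dj_db = np.sum(error) / m      # d/db J(w,b)
    return dj_dw, dj_db

def gradient_descent(x, y, w, b, alpha, num_iters):
    """Repeat the update rule a fixed number of times."""
    for _ in range(num_iters):
        dj_dw, dj_db = compute_gradient(x, y, w, b)
        # Simultaneous update: both gradients were computed from the old
        # (w, b) before either parameter is changed.
        w, b = w - alpha * dj_dw, b - alpha * dj_db
    return w, b

# Hypothetical training set generated from y = 2x + 1.
x_train = np.array([1.0, 2.0, 3.0, 4.0])
y_train = 2.0 * x_train + 1.0

w_fit, b_fit = gradient_descent(x_train, y_train, w=0.0, b=0.0,
                                alpha=0.05, num_iters=5000)
print(w_fit, b_fit)  # close to 2.0 and 1.0
```

A fixed iteration count stands in for "repeat until convergence" to keep the sketch short; a real run would more likely stop when the cost or the parameters stop changing.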

- Second major type of supervised learning algorithm: Classification

Classification: predict categories, which need not be numeric (e.g. dog, cat), from a small number of possible outputs

2. Unsupervised learning

Given data that isn't associated with any output labels y

Find something interesting in unlabeled data

Data only comes with inputs X, but not output labels Y.

Algorithm has to find structure in the data.

- Clustering algorithm

Takes data without labels and tries to automatically group it into clusters

Groups similar data points together (e.g. Google News: related news)

- Anomaly detection

Find unusual data points

- Dimensionality reduction

Compress data using fewer numbers

3. Recommender systems

4. Reinforcement learning
